Consequences

FIRST

Treating Data Movement as Purely a Plumbing Problem

Creating new pipelines takes weeks (not minutes)

Coordinating with application teams, hunting for the right tables, dealing with infrastructure complexity

Performance degrades over time

Queries get slower each month, costs keep rising, files multiply, teams lack expertise to optimize

Teams move data blindly

No security profiling, no quality context, no business meaning preserved during extraction

THEN

Addressing Governance and Optimization as Afterthoughts

Context already lost

Business meaning, lineage, producer-consumer relationships gone once data moves

Sensitive data already exposed

Scanning tools discover PII after it's already in your lakehouse, creating compliance violations

Performance already degrading

Wrong partitions chosen, file sprawl, query slowdowns—fixing problems after they've cost you

The impact

GDPR audits reveal PII in 14+ unexpected locations

Many dashboards break from a single schema change

4 engineers doing nothing but pipeline maintenance ($800k/year)

Finance, Sales, and Marketing report different revenue numbers from the same source

These aren't separate problems—they're the consequence of treating data movement and data intelligence as disconnected concerns.

How It Works

Orka Takes a Fundamentally Different Approach

Intelligence integrated across the entire pipeline lifecycle, from extraction through consumption

Instead of relying on separate tools that discover problems after data moves, Orka embeds intelligence at every stage:

01

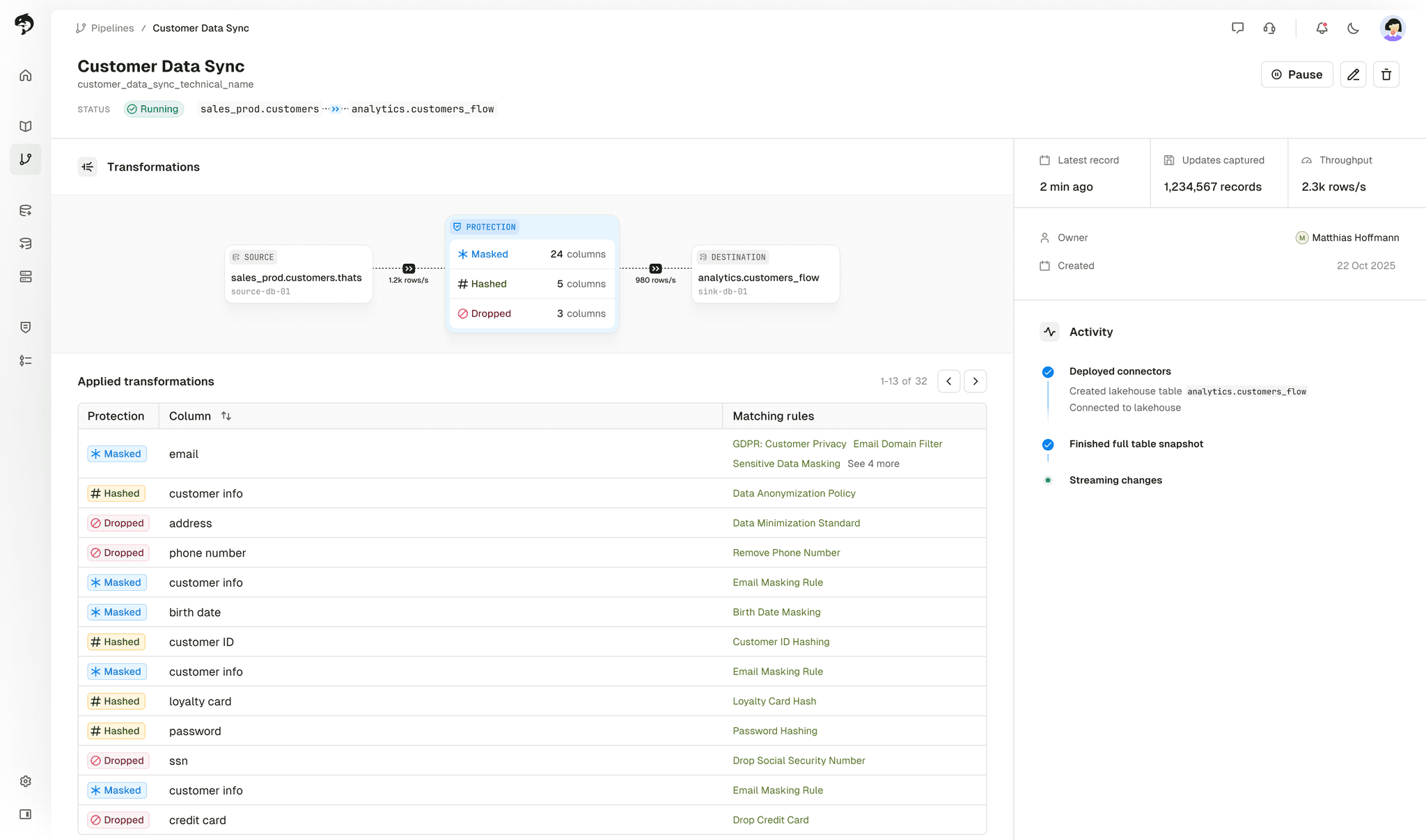

Profiling BEFORE extraction

02

Adapting DURING delivery

03

Continuously optimizing based on ACTUAL usage

Traditional tools: Extract data first → THEN add intelligence/security/optimization

Intelligent

Profiles data quality while moving

Optimizes layouts automatically

Gets smarter over time

Secure

Detects sensitive data at extraction

Tracks complete lineage

Prevents exposure before it happens

Resilient

Adapts to schema changes automatically

Alerts producers and consumers

Keeps data flowing without manual fixes

Orka helps data leaders unlock operational data for AI and analytics.

Self-Service Data Catalog

When data access requests take 3 weeks and finding the right table requires expert knowledge, Orka provides instant data discovery and automated access.

Teams browse available operational data, see complete profiling and lineage, and get secure access in hours with all security policies automatically enforced.

What this means for you

Analytics teams self-serve without waiting on data engineering

Find the right data in minutes, not weeks

Business context preserved from source to consumption

Secure-by-Design Data Movement

When sensitive data blocks 80% of analytics requests and GDPR audits reveal PII in unexpected locations, Orka's zero-trust architecture automatically detects and protects sensitive data at extraction, before it ever leaves your operational databases.

Unlike post-movement masking that leaves exposure windows, Orka ensures PII is masked, financial data is tokenized, and proprietary information is blocked at the source.
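As an illustration only, field-level protection at the source can be sketched as a policy applied to each row before it is handed to the pipeline. This is a minimal sketch, not Orka's implementation: the `POLICY` mapping, the field names, the `protect_row` helper, and the demo key are all hypothetical, and the HMAC-based token is just one common way to tokenize a value.

```python
import hashlib
import hmac

# Hypothetical classification of source fields; in practice this would
# come from profiling the source database, not from a hard-coded dict.
POLICY = {
    "email": "mask",
    "card_number": "tokenize",
    "pricing_model": "block",
}

# Demo key for the sketch only; a real key would live in a secrets manager.
SECRET_KEY = b"demo-key-not-for-production"


def mask(value: str) -> str:
    """Keep the first character and hide the rest."""
    return value[:1] + "*" * (len(value) - 1)


def tokenize(value: str) -> str:
    """Replace a value with a deterministic keyed token (truncated HMAC-SHA256)."""
    digest = hmac.new(SECRET_KEY, value.encode(), hashlib.sha256).hexdigest()
    return "tok_" + digest[:16]


def protect_row(row: dict) -> dict:
    """Apply the policy to one extracted row before it leaves the source."""
    protected = {}
    for field, value in row.items():
        action = POLICY.get(field, "pass")
        if action == "block":
            continue  # blocked fields are never emitted
        if action == "mask":
            value = mask(str(value))
        elif action == "tokenize":
            value = tokenize(str(value))
        protected[field] = value
    return protected
```

Because protection runs on the row before it is emitted, there is no intermediate copy holding the raw values, which is the "no exposure window" property described above.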

What this means for you

Security teams trust data movement without retroactive scanning

Compliance violations prevented before data moves

No exposure window between extraction and protection

Adaptive Pipelines

When schema changes break dashboards and require several engineers for constant maintenance, Orka's adaptive pipelines detect changes and adjust automatically.

Your ML models keep receiving features, dashboards stay up-to-date, and data keeps flowing even as operational databases evolve, without manual intervention.
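To make the idea concrete, schema-change handling can be thought of as two steps: detect what changed between two versions of a table's schema, then keep emitting rows in the shape downstream consumers expect. The sketch below assumes schemas are plain `{column: type}` dictionaries; `diff_schema` and `adapt_row` are hypothetical helper names, not part of any Orka API.

```python
def diff_schema(old: dict, new: dict) -> dict:
    """Compare two {column: type} mappings and report what changed."""
    added = {c: t for c, t in new.items() if c not in old}
    removed = [c for c in old if c not in new]
    retyped = {
        c: (old[c], new[c])
        for c in old
        if c in new and old[c] != new[c]
    }
    return {"added": added, "removed": removed, "retyped": retyped}


def adapt_row(row: dict, target_columns: list) -> dict:
    """Project a source row onto the columns consumers expect.

    Columns dropped at the source are filled with None so downstream
    readers keep a stable shape; new source columns are ignored until
    the target schema is extended.
    """
    return {c: row.get(c) for c in target_columns}
```

The output of `diff_schema` is also what an alert to producers and consumers would carry: which columns were added, removed, or retyped.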

What this means for you

Zero-downtime schema evolution

Engineering time freed from pipeline firefighting

Downstream systems stay operational through database changes

Complete Data Lineage

When auditors need proof of data handling and teams can't trace data quality issues, Orka provides end-to-end visibility of every data movement.

Track exactly what was extracted, how it was protected, where it went, and who accessed it.
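One way to picture such a record is a small event written for every movement, capturing the four questions above: what was extracted, how it was protected, where it went, and who accessed it. The `LineageEvent` structure and the `columns_with_protection` helper below are hypothetical and purely illustrative.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class LineageEvent:
    source_table: str   # what was extracted
    columns: list
    protections: dict   # how it was protected: column -> action
    destination: str    # where it went
    accessed_by: list = field(default_factory=list)  # who accessed it
    extracted_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def columns_with_protection(events: list, action: str) -> set:
    """Audit helper: which columns were ever moved with the given protection?"""
    return {
        col
        for event in events
        for col, applied in event.protections.items()
        if applied == action
    }
```

With events like these stored per movement, an audit question such as "which columns were masked before leaving the source?" becomes a simple query over the log.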

What this means for you

Instant audit readiness with complete protection tracking

Trace data quality issues back to source

Understand downstream impact before making changes

Continuous Optimization

Traditional pipelines degrade over time: files multiply, queries slow, and costs rise. Orka instead learns from query patterns to optimize how data is stored in the lakehouse.

The system automatically tunes file layouts and partitions, detects unused data, and adjusts what gets moved, reducing waste and cost. Data movement improves over time instead of degrading, because each query teaches the system what matters.
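A simplified way to see how query patterns can drive layout decisions: count which columns queries actually filter on, and suggest a partition column only when one clearly dominates. This is a toy heuristic under stated assumptions, not Orka's optimizer; the `suggest_partition_column` function, its input shape (one list of filter columns per query), and the `min_share` threshold are all hypothetical.

```python
from collections import Counter


def suggest_partition_column(query_filters: list, min_share: float = 0.5):
    """Return the most frequently filtered column, or None.

    query_filters holds one list of filter columns per observed query.
    A column is suggested only if it appears in at least min_share of
    the queries; otherwise no single partitioning choice dominates.
    """
    if not query_filters:
        return None
    # Count each column at most once per query.
    counts = Counter(col for cols in query_filters for col in set(cols))
    if not counts:
        return None
    column, hits = counts.most_common(1)[0]
    return column if hits / len(query_filters) >= min_share else None
```

For example, if three out of four observed queries filter on `order_date`, the function suggests `order_date`; if filters are spread evenly across many columns, it returns `None` and the layout is left alone.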

What this means for you

Compute costs decrease as usage increases

Query performance improves automatically

No specialized tuning expertise required

Universal Data Delivery

When operational data needs to reach many destinations with different security requirements, Orka creates a single governed pipeline that intelligently routes protected data everywhere.

Analytics teams get clean data in Snowflake, ML systems receive real-time features, AI platforms access up-to-date signals, and partners consume tokenized data, all without building custom integrations.

What this means for you

Security enforced consistently wherever data flows

Reduce complexity of managing multiple extraction points

Deliver data to analytics, ML, and operational systems with confidence in protection

Orka connects any operational database (Oracle, SQL Server, DB2, PostgreSQL, MongoDB, MySQL) to any data warehouse or lakehouse (Snowflake, Databricks, BigQuery) and to open table formats (Apache Iceberg, Delta Lake).

Our Approach

Shrinking expertise requirements

Intelligence built into the platform eliminates the need for specialized optimization skills. What previously required data engineers with deep lakehouse expertise now happens automatically.

Compounding Efficiencies

Traditional pipelines degrade: files multiply, queries slow, costs rise. Orka improves over time. Each query teaches the system what matters, automatically optimizing layouts, partitions, and refresh patterns.

Prevention vs Remediation

Catch issues at extraction, not after they've created downstream costs. Sensitive data detected before moving. Performance optimized before queries slow. Schema changes handled before pipelines break.

Why This Matters

Traditional data pipelines degrade over time. Orka improves. Here's what that means.

Accelerate Time-to-Data

From weeks to hours with self-service access and automated security controls

Prevent Data Incidents

50% fewer data breaches through field-level protection before movement

Reduce Engineering Overhead

70% reduction in pipeline maintenance and creation work

Eliminate Pipeline Failures

75% reduction in schema-change related breakages

Increase Use-Case Activation

5x more AI, analytics, and reporting projects delivered

Ensure Continuous Compliance

Instant audit readiness with complete lineage and protection tracking